Develop Machine Learning Models with Jupyter Notebooks

This section requires a Practicus AI Cloud Worker. Please visit the introduction to Cloud Workers section of this tutorial to learn more.

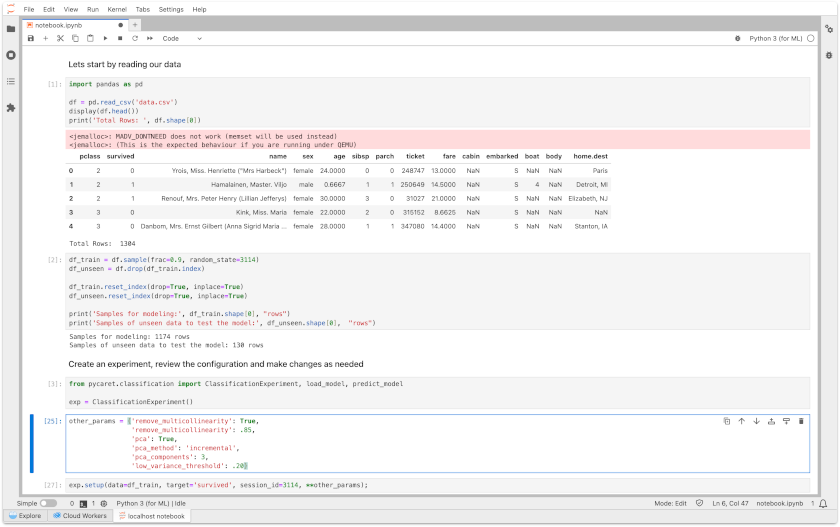

This section explains how both technical and non-technical users can edit the generated code to improve the machine learning models they build.

Once the model is built, you can either save it as is or edit the generated Jupyter Notebook code to improve it.

- Open Explore tab

- Make sure a Cloud Worker is selected (upper right)

- Select Cloud Worker Files and open the file below

- Home > samples > titanic.csv

- Select Model > Predictive Objective

- Choose Survived as the Objective Column and Classification as the Technique

- Click OK

- After the model build is completed you will see a dialog

- Select Open Jupyter Notebook to experiment further

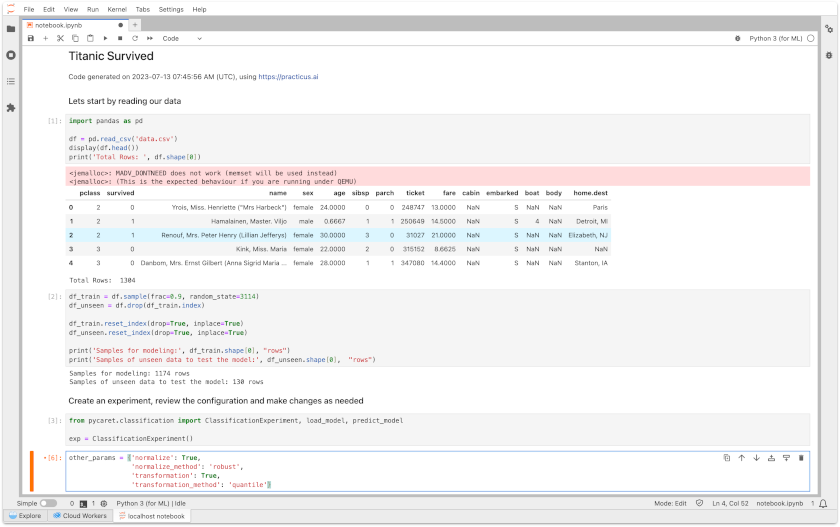

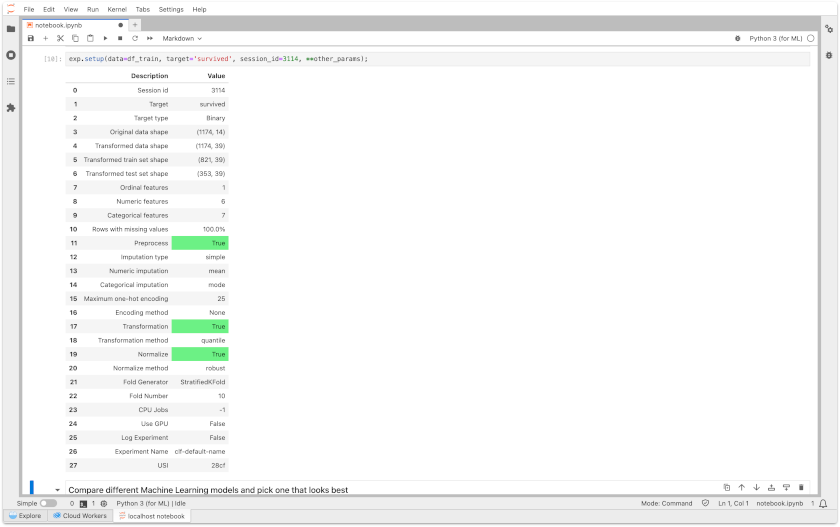

Scale and Transform

In the Scale and Transform section, you can rescale the values of numeric columns in the dataset without distorting differences in the ranges of values or losing information.

Parameters for Normalization, in short:

- You must first set normalize to True

- You can choose z-score, minmax, maxabs, or robust as the normalization method
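To make the four normalization methods concrete, here is a minimal sketch in plain Python of what each one computes on a single numeric column. The function names and the sample `fares` values are illustrative assumptions, not part of the generated notebook, which relies on its own library implementation.

```python
from statistics import mean, median, pstdev, quantiles

def zscore(values):
    """Center on the mean and divide by the standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

def minmax(values):
    """Rescale linearly so the smallest value is 0 and the largest is 1."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def maxabs(values):
    """Divide by the largest absolute value; zeros stay zero."""
    scale = max(abs(v) for v in values)
    return [v / scale for v in values]

def robust(values):
    """Center on the median and divide by the interquartile range."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    med = median(values)
    return [(v - med) / (q3 - q1) for v in values]

# Hypothetical column values, chosen only for illustration
fares = [0.0, 10.0, 20.0, 30.0, 40.0]

print(minmax(fares))  # [0.0, 0.25, 0.5, 0.75, 1.0]
print(robust(fares))  # [-1.0, -0.5, 0.0, 0.5, 1.0]
```

The robust method is usually the safer choice when a column contains outliers, because the median and interquartile range are less affected by extreme values than the mean and standard deviation.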

Parameters for Feature Transform, in short:

- You must first set transformation to True

- You can choose yeo-johnson or quantile methods.

- For the Target Transform you can choose yeo-johnson or quantile methods
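As an illustration of what the yeo-johnson method does, the sketch below applies the Yeo-Johnson formula with a fixed power parameter. In practice the parameter is estimated from the data rather than chosen by hand; the function name `yeo_johnson`, the argument `lmbda`, and the sample values are assumptions made for this example.

```python
import math

def yeo_johnson(x, lmbda):
    """Yeo-Johnson power transform of one value for a fixed lambda.

    Unlike a plain log transform, it is defined for zero and negative inputs.
    """
    if x >= 0:
        if abs(lmbda) < 1e-12:
            return math.log1p(x)
        return ((x + 1) ** lmbda - 1) / lmbda
    if abs(lmbda - 2) < 1e-12:
        return -math.log1p(-x)
    return -(((-x + 1) ** (2 - lmbda)) - 1) / (2 - lmbda)

# Hypothetical right-skewed values; lambda = 0 compresses the long tail
skewed = [0.0, 1.0, 10.0, 100.0]
transformed = [yeo_johnson(v, 0.0) for v in skewed]
print(transformed)

# With lambda = 1 the transform leaves values unchanged
print(yeo_johnson(3.0, 1.0), yeo_johnson(-3.0, 1.0))  # 3.0 -3.0
```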

If you want to learn more about Scale and Transform, you can review this link

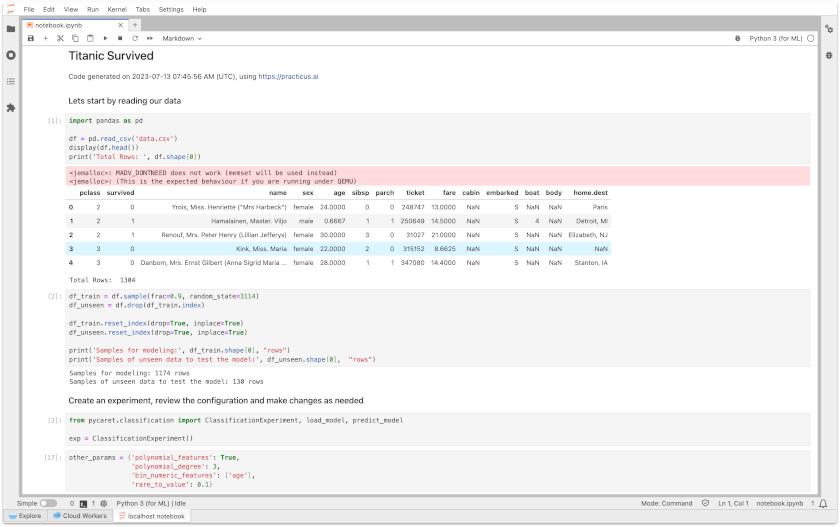

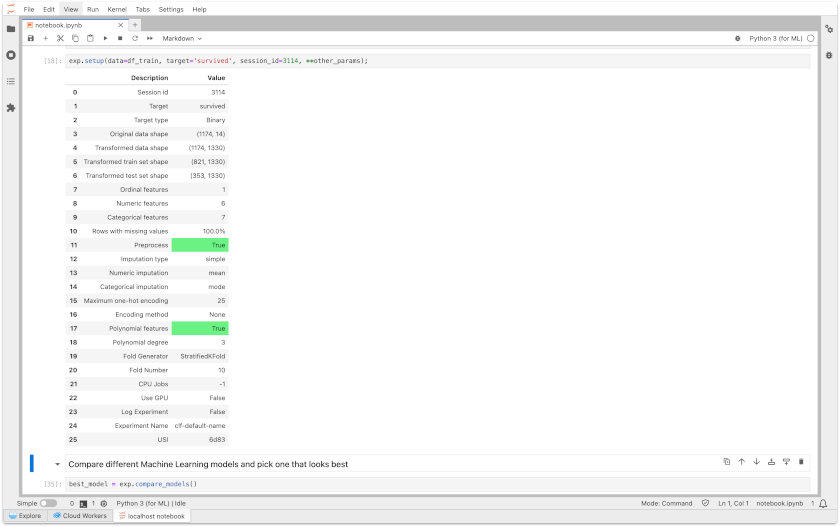

Feature Engineering

In the Feature Engineering section, you can automatically generate new variables and test whether they improve the accuracy of the model. You can also apply rare encoding to categories that occur with low frequency.

Parameters for Polynomial Features, in short:

- You must first set polynomial_features to True

- polynomial_degree should be an int
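The following sketch shows how the number of generated columns grows with polynomial_degree. It is a plain-Python illustration that omits the constant bias term; the helper name `polynomial_features` and the sample row are assumptions for this example.

```python
from itertools import combinations_with_replacement
from math import prod

def polynomial_features(row, degree):
    """Return all products of the row's values up to the given degree."""
    out = []
    for d in range(1, degree + 1):
        for combo in combinations_with_replacement(row, d):
            out.append(prod(combo))
    return out

# Two input features a=2, b=3 become a, b, a^2, a*b, b^2
print(polynomial_features([2, 3], 2))  # [2, 3, 4, 6, 9]

# Three features at degree 3 already produce 19 columns
print(len(polynomial_features([1, 2, 3], 3)))  # 19
```

Because the feature count grows quickly, a high degree on a wide dataset can slow training and encourage overfitting.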

Parameters for Bin Numeric Features, in short:

- bin_numeric_features should be a list (the numeric columns to bin)
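Binning replaces each numeric value with the index of the interval it falls into. The sketch below uses equal-width intervals purely for illustration; the binning strategy applied by the generated notebook may differ, and the helper name `bin_equal_width` and the sample ages are assumptions.

```python
def bin_equal_width(values, n_bins):
    """Map each value to a bin index from 0 to n_bins - 1 (equal-width bins)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column has nothing to split; put everything in bin 0
        return [0 for _ in values]
    width = (hi - lo) / n_bins
    # The maximum value would land in bin n_bins, so clamp it into the last bin
    return [min(int((v - lo) / width), n_bins - 1) for v in values]

# Hypothetical passenger ages
ages = [2, 18, 25, 40, 62, 80]
print(bin_equal_width(ages, 3))  # [0, 0, 0, 1, 2, 2]
```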

Parameters for Combine Rare Levels, in short:

- rare_to_value: float, default=None

- rare_value: default='rare'
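The two parameters above can be read as follows: any category whose share of rows falls below rare_to_value is replaced by the label in rare_value. This is a minimal sketch of that idea, assuming rare_to_value is a fraction of the row count; the helper name `combine_rare_levels` and the sample data are illustrative.

```python
from collections import Counter

def combine_rare_levels(values, rare_to_value, rare_value="rare"):
    """Replace categories rarer than rare_to_value (a fraction) with rare_value."""
    counts = Counter(values)
    min_count = rare_to_value * len(values)
    return [v if counts[v] >= min_count else rare_value for v in values]

# Hypothetical embarkation ports: "Q" appears in only 1 of 10 rows
embarked = ["S"] * 7 + ["C"] * 2 + ["Q"]
print(combine_rare_levels(embarked, 0.15))
# ['S', 'S', 'S', 'S', 'S', 'S', 'S', 'C', 'C', 'rare']
```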

If you want to learn more about Feature Engineering, you can review this link

Feature Selection

In the Feature Selection section, you can set which variables to include in the model and exclude some variables based on the relationships between the variables.

Parameters for Remove Multicollinearity, in short:

- You must first set remove_multicollinearity to True

- multicollinearity_threshold should be a float
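To show how a correlation threshold removes redundant columns, the sketch below keeps a feature only if its absolute Pearson correlation with every already kept feature is below the threshold. This is a simplification: which feature of a correlated pair is dropped may be decided differently in the generated notebook. The helper names and sample columns are assumptions.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

def drop_collinear(columns, threshold):
    """Return the names of columns kept after removing highly correlated ones."""
    kept = []
    for name, values in columns.items():
        if all(abs(pearson(values, columns[k])) < threshold for k in kept):
            kept.append(name)
    return kept

# Hypothetical data: fare_x2 is an exact multiple of fare, so it is redundant
columns = {
    "fare": [7.0, 71.0, 8.0, 53.0, 8.5],
    "fare_x2": [14.0, 142.0, 16.0, 106.0, 17.0],
    "age": [22.0, 38.0, 26.0, 35.0, 35.0],
}
print(drop_collinear(columns, 0.9))  # ['fare', 'age']
```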

Parameters for Principal Component Analysis, in short:

- You must first set pca to True

- pca_method should be linear, kernel, or incremental

- pca_components should be None, an int, a float, or 'mle'

- Hint: with 'mle', Minka’s MLE is used to guess the dimension (only for pca_method='linear')
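An int for pca_components is simply the number of components to keep. A float is less obvious; the sketch below assumes it is read as the fraction of variance to retain, as in scikit-learn's PCA, and picks the smallest number of components whose cumulative explained variance exceeds that fraction. The helper name and the sample variances are illustrative.

```python
def components_for_variance(explained_variance, fraction):
    """Smallest number of components whose cumulative variance exceeds fraction."""
    total = sum(explained_variance)
    running = 0.0
    ordered = sorted(explained_variance, reverse=True)
    for k, var in enumerate(ordered, start=1):
        running += var / total
        if running > fraction:
            return k
    return len(explained_variance)

# Hypothetical per-component variances: shares are 40%, 30%, 20%, 10%
variances = [4.0, 3.0, 2.0, 1.0]
print(components_for_variance(variances, 0.8))  # 3
print(components_for_variance(variances, 0.5))  # 2
```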

Parameters for Ignore Low Variance, in short:

- low_variance_threshold should be a float or None
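As a rough illustration of this filter, the sketch below drops every column whose variance does not exceed the threshold and skips the step entirely when the threshold is None. The helper name `drop_low_variance` and the sample columns are assumptions; with a threshold of 0, only constant columns are removed.

```python
from statistics import pvariance

def drop_low_variance(columns, threshold):
    """Return names of columns whose population variance exceeds threshold."""
    if threshold is None:
        # None means the filter is turned off
        return list(columns)
    return [name for name, values in columns.items()
            if pvariance(values) > threshold]

# Hypothetical columns: "constant" carries no information
data = {
    "pclass": [1, 3, 3, 2],
    "constant": [1, 1, 1, 1],
    "sex_male": [1, 0, 0, 1],
}
print(drop_low_variance(data, 0))     # ['pclass', 'sex_male']
print(drop_low_variance(data, 0.3))   # ['pclass']
print(drop_low_variance(data, None))  # ['pclass', 'constant', 'sex_male']
```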

If you want to learn more about Feature Selection, you can review this link